The new data technologies, along with legacy infrastructure, are driving market-driven innovations like personalized offers, real-time alerts, and predictive maintenance. However, these technical additions - ranging from data lakes to analytics platforms to stream processing and data mesh —have increased the complexity of data architectures. They are significantly hampering the ongoing ability of an organization to deliver new capabilities while ensuring the integrity of artificial intelligence (AI) models.

Today's transactional data systems run between data warehouses and operational databases like Oracle, Microsoft SQL Server, or PostgreSQL. On the contrary, machine learning (ML) and analytics have usually occurred in data lakes or data warehouses. While this can indicate that the organization is on the right track, they can probably notice a rise in costs related to ETL (extract, transform and load), data access, and data management.

A recent MIT Technology Review survey stated that 47% of data executives are experiencing a reduction in duplicated data as the main factor of their data strategy initiatives. Approximately 50% of organizations are copying the transactional data system from the warehouse to the data lake. This has led to creating a negative impact due to the rising cost of data movements. The associated data latency, as well as the reliability implications, are also on the rise.

Read more: How are Organizations Modernizing their Data Security and Management?

Organizations can perform near real-time analysis on operational databases, such as SQL server-based databases, or non-SQL DB, such as Cosmos DB. Gartner Inc. defined this framework as hybrid transactional/analytical processing (HTAP). Many cloud providers are investing in tools to simplify this integration. In addition, if the organization has a data catalog, it can contain all the data in the data lake, allowing users to discover and use the data without needing IT resources.

Organizations can reduce their ETL (extract, transform and load) related costs as well as maximize the return on their investments by loading all of their data into the data lake. They can prepare the data based on the desired business logic and store it back for applications and report usage. This approach assists in identifying the data that the business requires without reinvesting in data ingestion, thus significantly impacting the current implementation.

But the current market dynamics do not allow for such slowdowns. Hence industry leaders are making use of technological innovations to upend the traditional data models, requiring laggards to reimagine the aspects of data architecture.

Understanding Data Management Architecture

Data architecture specialists are familiar with the emerging concept of data lakehouse and data mesh. While a data lakehouse refers to different formats of data storage, analysis, and queries, Data Mesh encompasses a series of concepts connected to data management in a decentralized and large-scale manner. These data architectures are enabling organizations to democratize the use of data within operations. It is also assisting them in managing data in a more flexible pattern than ever before.

Data Lakehouse

Data leaders are investing their time and effort to establish a unified platform to reduce their analytics infrastructure complexity as well as to promote collaboration across crucial roles like data engineers, data scientists, and business analysts. By doing so, they can reduce costs, operate on the data in a more efficient manner, focus on organizational challenges and adapt to ongoing changes.

A unified platform will also enable the data scientists to develop, deploy and operationalize their machine-learning models quickly. This approach will assist in enriching the organizational data with predictions, implying business analysts incorporate those into their Power BI reports, thus shifting the insights from descriptive to predictive.

Read more: The Rise of a Data-Driven Work Environment: What Do Enterprises Need to Know

Organizations are shifting to an easy-to-use experience where the data workload is purpose-built while being deeply integrated. The potential evolution of the data architecture forms the basis of the data lakehouse introduced. It proposes the idea that a single data lakehouse is capable of supporting machine learning and analytics in the same place while avoiding silos. With a single data lakehouse, organizations can reduce data duplication, thus facilitating a more effective usage of ML across their data operations.

Data Mesh

Data mesh is emerging as a preferred data strategy by organizations. A domain-driven analytical data architecture, data mesh, helps businesses in facilitating data democratization as data is becoming a company’s product, and data products have different data product patterns.

Data Mesh functions on a socio-technical paradigm that works on four principles:

-

Principle of Domain Ownership - decentralization and distribution of data responsibility to those who are closest to the data.

-

Principle of Data as a Product - the analytical data delivered is treated as a product, and the consumers are treated as customers.

-

Principal of the Self-serve Data Platform - self-serve data platform services that enable domains’ cross-functional teams to transfer data.

-

Principal of Federated Computational Governance - a decision-making model for data product owners and data platform product owners that provides them the autonomy as well as the domain-local decision-making power to create and adhere to a set of global rules.

For example: If a marketing business unit (BU) is looking to publish a data product that defines the company’s top-selling products. On the contrary, the operations BU also wanted to build demand-like models, considering the mentioned top-selling products data as input. With data mesh, the team will not have to transfer the data between business units and create a disconnected copy. Instead, they can just subscribe to the marketing data product and utilize it in their analysis.

Data mesh is likely to fit in every organization. It functions best for global and complex organizations that need to ensure that their business units are sharing data effectively while working independently on the data products.

Read more: What Is Data Democratization? How is it Accelerating Digital Businesses?

The Process of Implementation and Evolution

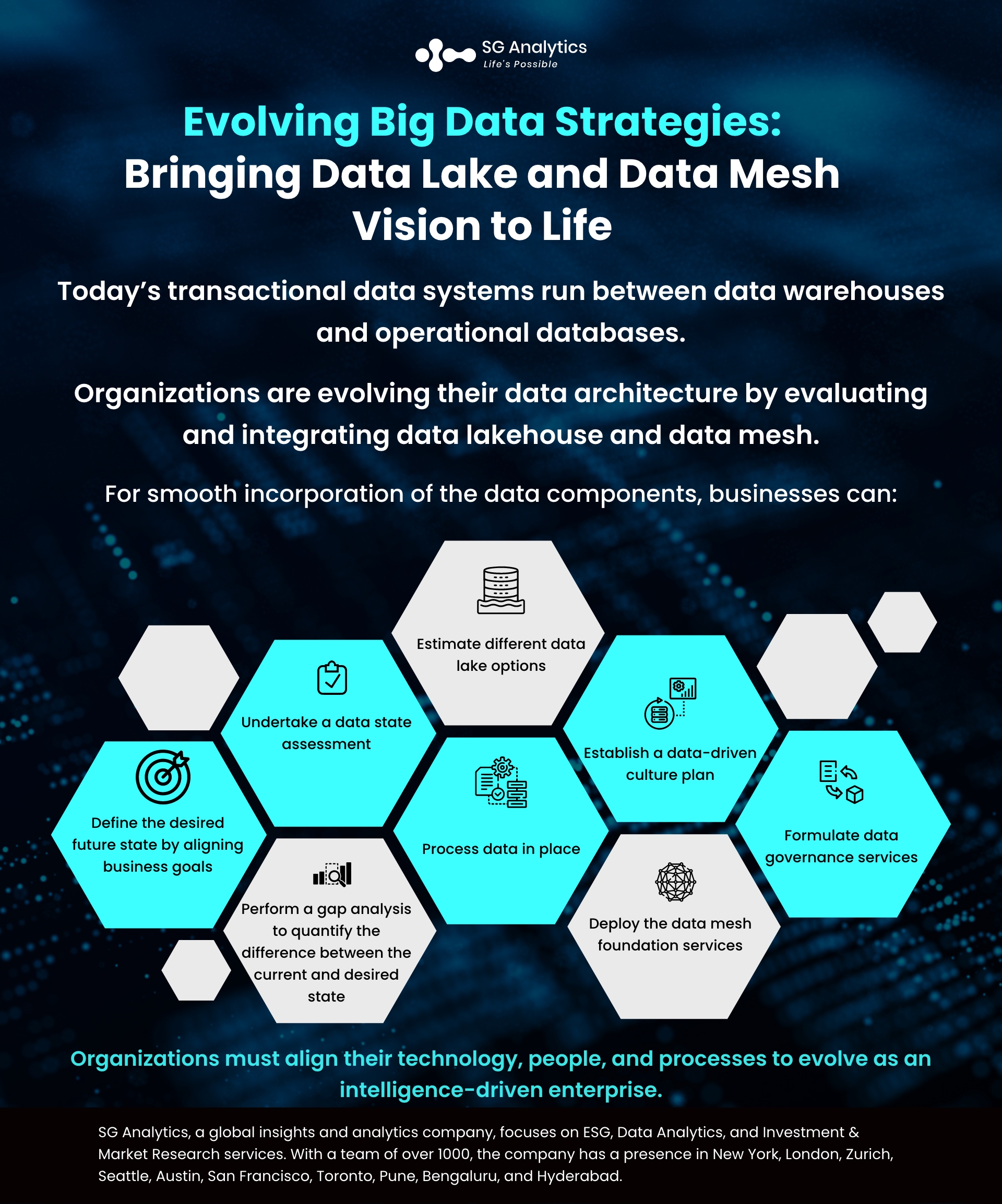

Implementing data lakehouse and data mesh as a part of the organization’s big data strategy is a journey. And organizations should embark on this journey without impacting their existing business operations. For smooth incorporation of these data components, businesses can undertake the following steps:

-

Defining the desired future state by aligning business goals, people development, and the evolution of processes.

-

Undertaking a data state assessment to identify the organization’s current state.

-

Performing a gap analysis to quantify the difference between the current and desired state, recognize opportunities, and design an actionable road map.

-

Estimating different data lake options and performing a pilot with a use case that involves data engineers and data scientists.

-

Processing data in place. Instead of employing a traditional ETL for data movement into a data lakehouse, businesses can opt for a TEL (transform, extract and load) to process the data within the distributed data store.

-

Establishing a data-driven culture plan that involves the board of directors as stakeholders.

-

Deploying the data mesh foundation services to create the first data products.

-

Formulating data governance services like data catalog, data usage detection, and data classification.

Key Highlights

-

Adaptive AI systems, data sharing, and data fabrics are the emerging trends that data and analytics leaders need to build on to drive resilience and innovation.

-

These data and analytics trends will authorize organizations to anticipate change and manage uncertainty.

-

Investing in trends most relevant to the organization can help in meeting the CEO’s priority of returning to and accelerating growth.

-

With data being the most critical and significant component of data architectures, organizations are easing into the idea of incorporating data components like data lakehouses and data mesh to build a sustainable data environment.

Read more: Data Fabric and Architecture: Decoding the Cloud Data Management Essentials

In Conclusion

As data, analytics, and AI are becoming more embedded in the day-to-day operations of most organizations; it can be clearly stated that a radically distinct approach to data architecture is necessary to create and grow data-centric enterprises. Data and technology leaders who are open to embracing this new approach will better position their organizations and lead them on the path to becoming agile, resilient, as well as competitive.

Organizations are now evolving their data architecture by evaluating and integrating data lakehouse as well as data mesh when required. To implement modern analytics and data governance at scale, organizations must align their technology, people, and processes to evolve as an intelligence-driven enterprise.

With a presence in New York, San Francisco, Austin, Seattle, Toronto, London, Zurich, Pune, Bengaluru, and Hyderabad, SG Analytics, a pioneer in Research and Analytics, offers tailor-made data services to enterprises worldwide.

A leader in Data Solutions, SG Analytics offers an in-depth domain knowledge and understanding of the underlying data with expertise in technology, data analytics, and automation. Contact us today if you are looking to understand the potential risks associated with data and develop effective data strategies and internal controls to avoid such risks.