As AI runs up against the limits of traditional scaling, the focus is shifting toward reasoning-driven approaches. This marks a defining moment in AI’s evolution, with the emphasis moving to human-like problem-solving rather than ever-larger models alone.

Over the past decade, the scaling hypothesis has driven AI advancements, holding that larger models, bigger datasets, and more computing power lead to consistent performance improvements. In 2019, Richard Sutton argued in his essay “The Bitter Lesson” that general methods that leverage raw computation have consistently outperformed attempts to encode human expertise. A year later, OpenAI researchers lent empirical support to this hypothesis with their work on scaling laws for language models. The leap from GPT-3 to GPT-4 highlighted such gains, showcasing enhanced creativity and problem-solving capabilities.

Despite these advancements, scaling has begun to show diminishing returns. Models like Google’s Gemini have reportedly struggled to meet internal expectations, while Anthropic’s Claude reportedly faced delays in its release. “The 2010s were the age of scaling, now we’re back in the age of wonder and discovery once again,” Ilya Sutskever, co-founder of Safe Superintelligence, said in November 2024. Analysts observe that benchmarks are nearing saturation and that further scaling yields only incremental progress, suggesting that larger models alone will not be enough for a game-changing evolution in AI.
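One way to picture diminishing returns is to assume, purely for illustration, that model loss falls as a power law in model size. The sketch below is not taken from any specific model: the functional form, the exponent `ALPHA`, and the helper name `relative_loss` are all assumptions chosen to show the shape of the curve.

```python
# Illustrative sketch only: the power-law form and the exponent are assumptions,
# not figures reported in this article.
ALPHA = 0.076  # assumed exponent, of the order reported in published scaling-law studies


def relative_loss(scale_factor: float, alpha: float = ALPHA) -> float:
    """Loss relative to a baseline model after growing it by scale_factor."""
    return scale_factor ** (-alpha)


if __name__ == "__main__":
    previous = relative_loss(1)
    for exponent in range(1, 6):
        current = relative_loss(10 ** exponent)
        # Each extra 10x in size buys a smaller absolute improvement than the last.
        print(f"10^{exponent}x larger: relative loss {current:.3f}, "
              f"gain from last 10x {previous - current:.3f}")
        previous = current
```

Under these assumptions, every tenfold increase in size shrinks the loss by the same ratio, so the absolute improvement gets smaller at each step while the cost keeps multiplying.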

AI Scaling Constraints

As scaling laws dominate AI development, several barriers have emerged, particularly in data availability. By some estimates, achieving human-level performance on tasks such as writing scientific papers would require roughly 10^35 floating-point operations (FLOPs) of training compute and about 100,000 times more high-quality data than currently exists. While synthetic data offers a potential solution, it risks producing models that amplify the limitations inherited from their predecessors. This highlights the need for innovative approaches beyond scaling to advance AI capabilities.

The computational demands of scaling also pose significant challenges, with state-of-the-art models consuming as much energy as small cities. Future generations will demand even more, making this path unsustainable. Additionally, current architectures excel at interpolating within known data but struggle to extrapolate to new scenarios. These limitations show that scaling alone cannot close the architectural gaps in AI development.

Moving Beyond Scaling

As AI development reaches the limits of traditional scaling, the focus shifts toward reasoning-focused methods to enhance performance. These approaches aim to replicate human-like analytical abilities, allowing models to assess tasks in depth, evaluate outcomes, and make informed decisions in unfamiliar scenarios. OpenAI CEO Sam Altman has suggested that AGI (Artificial General Intelligence) is closer than expected and will likely emerge in 2025, underscoring the relevance of advanced reasoning. Reasoning-focused systems apply adaptive processing at inference time to tackle complex problems that pre-trained models alone cannot solve, prioritizing critical analysis over sheer model size.

OpenAI’s o1 Model

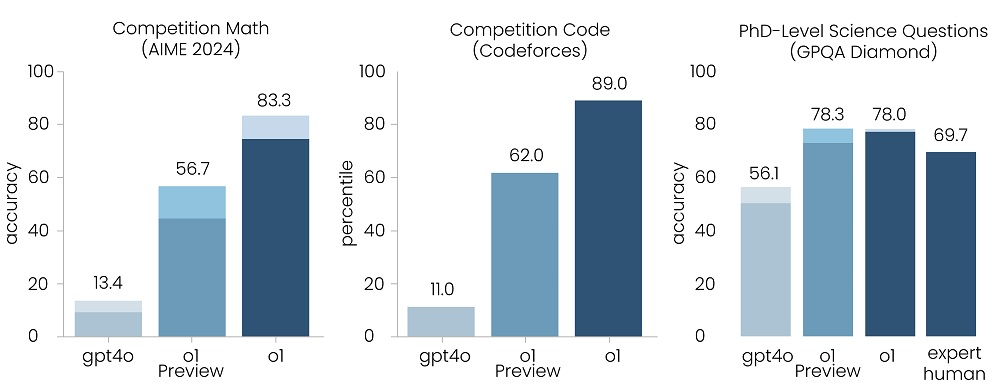

OpenAI’s o1 model, previously code-named Strawberry, represents a major step forward in AI reasoning by focusing on inference-time compute, which lets the model stop and think before responding. OpenAI researcher Noam Brown has noted that, in his earlier work on poker, letting a bot think for 20 seconds per hand delivered the same performance boost as scaling the model up 100,000 times. This approach allowed o1 to surpass GPT-4o on benchmarks such as the 2024 AIME (American Invitational Mathematics Examination), where it scored as high as 93% when re-ranking 1,000 sampled answers, a result that places it among the top 500 US math students. It also outperformed GPT-4o on 54 of 57 MMLU subcategories.

Figure: o1 outperforms GPT-4o on a diverse set of human exams

Source: OpenAI o1 technical report, as of September 12, 2024

The o1 model demonstrates its advanced reasoning capabilities through backtracking and chain-of-thought processes, enabling multi-step problem-solving and self-correction. This approach allows the model to revisit and refine its reasoning, much like human decision-making. OpenAI’s rivals, including Google DeepMind and Anthropic, are exploring similar methods to enhance their models’ reasoning capabilities, pushing beyond the limitations of traditional scaling approaches.

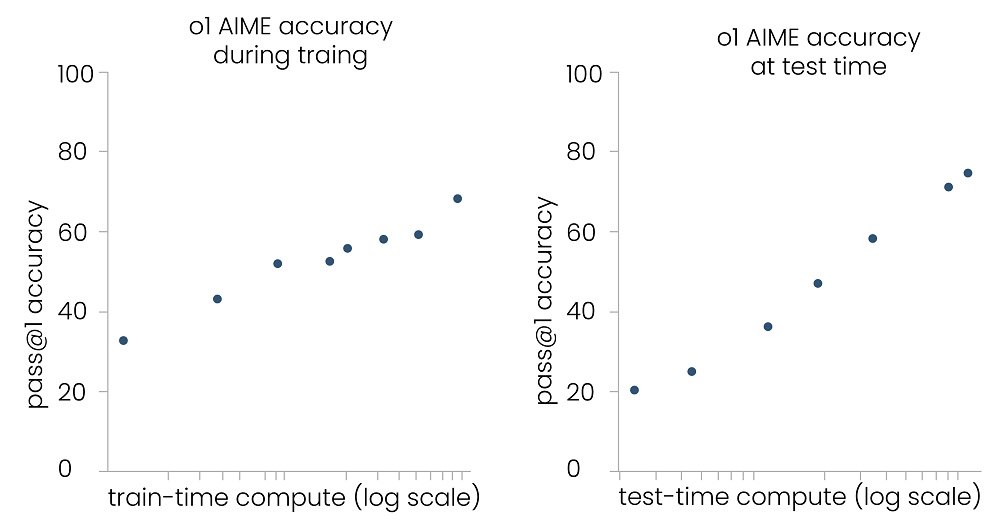

Figure: o1’s performance improves with both train-time and test-time compute

Source: OpenAI o1 technical report, as of September 12, 2024
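To make the idea of test-time compute concrete, here is a toy sketch of one simple, publicly known technique: sampling several answers and taking a majority vote. This is not how o1 works internally, which OpenAI has not fully disclosed. The `noisy_solver` function is a hypothetical stand-in for a single sampled reasoning chain, and the 40% per-sample accuracy and the answer `42` are made-up values for illustration.

```python
import random
from collections import Counter


def noisy_solver(rng: random.Random, correct: int = 42, p_correct: float = 0.4) -> int:
    """Hypothetical stand-in for one sampled reasoning chain.

    Returns the right answer with probability p_correct, otherwise one of
    several scattered wrong answers.
    """
    if rng.random() < p_correct:
        return correct
    return correct + rng.choice([-3, -2, -1, 1, 2, 3])


def majority_vote(rng: random.Random, n_samples: int) -> int:
    """Sample n_samples answers and return the most common one."""
    answers = [noisy_solver(rng) for _ in range(n_samples)]
    return Counter(answers).most_common(1)[0][0]


def accuracy(n_samples: int, trials: int = 2000, seed: int = 0) -> float:
    """Fraction of trials in which the voted answer is correct."""
    rng = random.Random(seed)
    hits = sum(majority_vote(rng, n_samples) == 42 for _ in range(trials))
    return hits / trials


if __name__ == "__main__":
    # More samples per question means more inference-time compute.
    for n in (1, 5, 25, 125):
        print(f"{n:>3} samples per question -> accuracy {accuracy(n):.2f}")
```

The solver itself never changes in this sketch. Accuracy rises only because more computation is spent per question at inference time, which is the trade-off that test-time compute exploits.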

Hurdles in AI Reasoning

AI reasoning models still struggle with complex problems that demand genuine understanding rather than pattern matching. Transparency issues in the o1 model compound the problem: its opaque self-verification process prevents users from examining the reasoning steps, which increases the risk of misleading outputs, particularly in high-stakes areas such as legal analysis or healthcare. A September 2024 report by OpenAI raised concerns about the o1 model’s risk of misuse, including deceptive responses and exploitation by cybercriminals, emphasizing the urgent need for robust safeguards.

The Path Forward

AI stands at a pivotal moment. Even if AI models become more affordable and are backed by massive computing power, their ability to solve complex problems such as automating research in mathematics, science, and technology remains uncertain. NVIDIA anticipates a shift toward the inference chip market as demand for training chips slows, opening opportunities for competitors to challenge its leadership in the AI chip market. Moreover, businesses are seeking innovative applications of AI reasoning to address future needs. Overall, a significant breakthrough akin to ChatGPT is crucial to redefining AI’s trajectory.

Conclusion

AI is entering a new era of discovery, with advancements in test-time compute, resource optimization, and human-like problem-solving techniques shaping its course. By balancing scalability with efficiency, these innovations promise more capable systems that excel at complex tasks without the high costs associated with larger models. This evolution reflects a broader shift toward smarter, more adaptable AI, paving the way for transformative progress across various domains.

