Artificial intelligence is steadily making everyday life run more smoothly. From logistics optimization, fraud detection, research, and art composition to virtual assistants that provide personalized experiences, AI is transforming our lives for the better. Companies use advanced data analytics, powered by machine learning (ML) and AI, to make crucial business decisions. AI systems rely entirely on algorithms, which are designed to help the machine sense and understand its environment, take inputs, process those inputs to provide a solution, assess risk, predict future trends, and so on.

When AI was still fairly new, systems based on it functioned purely according to programs written by humans. As the technology has advanced, however, today’s AI systems are capable of ‘learning’ from manually fed data and from readings collected from their surroundings. The machine learns on its own, which paved the way for the machine learning generation, in which the system doesn’t need to be programmed explicitly. The race to reach new heights in AI development has, in turn, raised questions about ethics in AI.

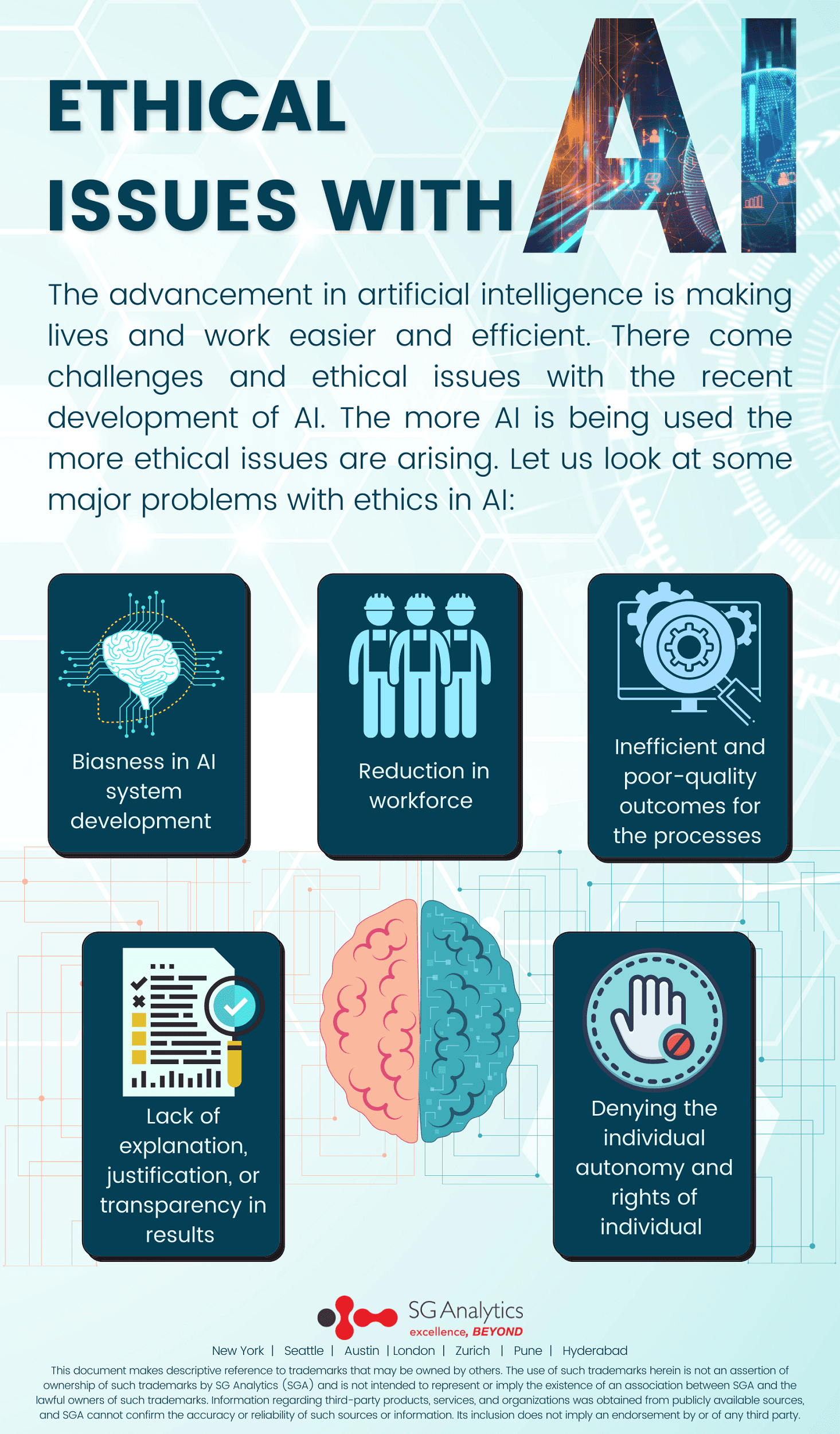

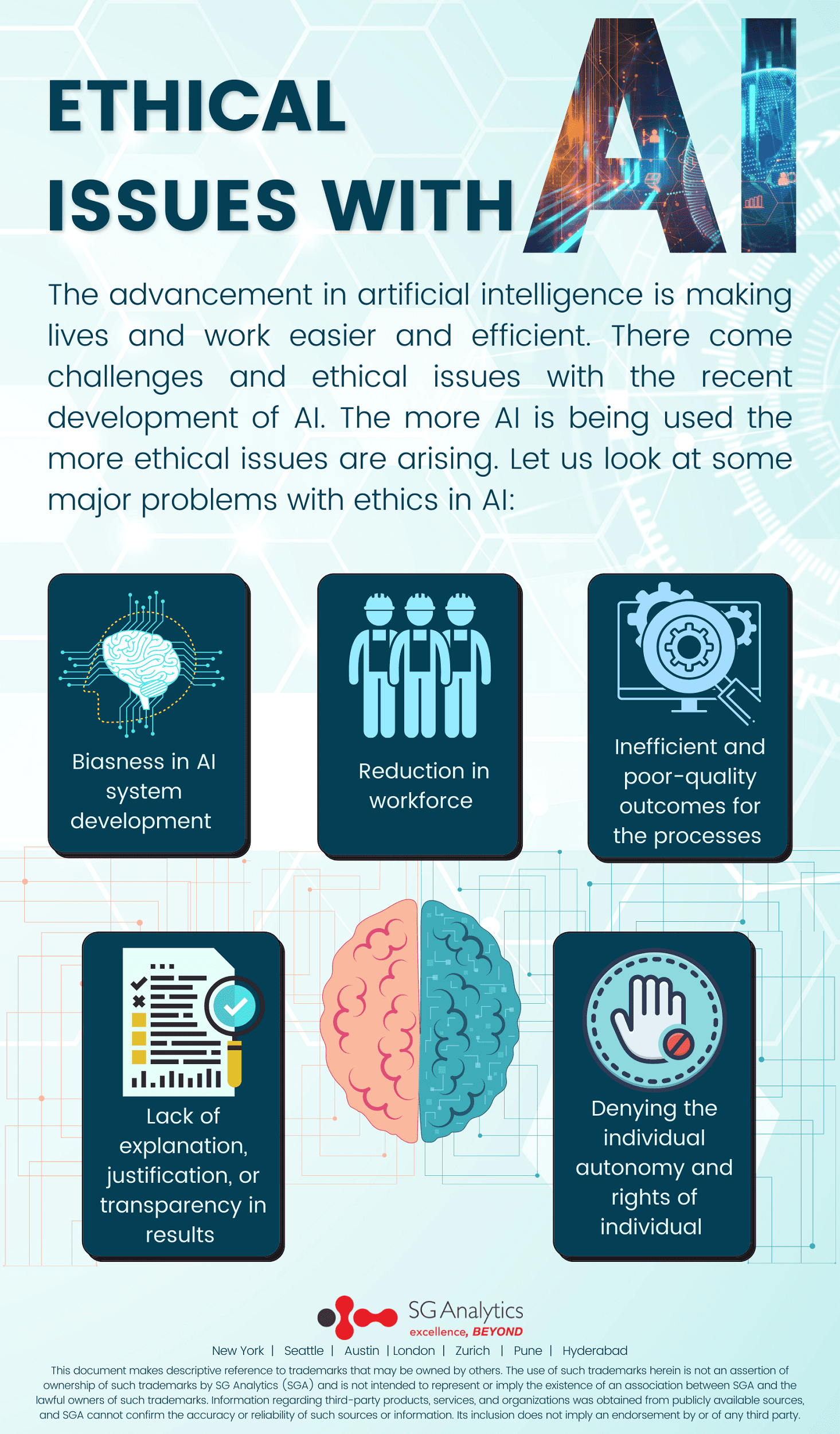

Ethics in Artificial Intelligence

In 2018, in Tempe, Arizona, USA, a woman walking her bicycle across the road was struck and killed by a self-driving test car. Even though there was a human behind the wheel, the AI system was in control of the car. Incidents like this, in which humans and AI interact, raise a series of ethical questions about the use of AI. Who was responsible for the woman’s death? Was it the human behind the wheel? The manufacturer of the self-driving car? Or perhaps the designers of the AI system? The question of who bears moral responsibility remains unsettled. It also raises another question: what rules are guiding modern AI systems toward moral and ethical behavior? Let us have a look at some common ethical issues in AI:

Bias in AI

An example of bias in AI: in 2014, Amazon began developing a recruiting tool to identify the right candidates for software engineering roles. As the system learned from the data it was fed, it started discriminating against women, and the company abandoned the system in 2017. Whatever algorithm an AI system runs on, the data it is fed and the assumptions built into its early design can bias its outcomes, so the results end up reflecting the choices of the people who built it.
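One simple way to check a screening model for this kind of bias is to compare how often it selects candidates from each group. The sketch below is a minimal illustration, not a description of how any real recruiting tool was audited: the function names and the sample data are made up for this example. It computes each group's selection rate and the ratio of the lowest rate to the highest. A common rule of thumb in US employment practice, the "four-fifths rule", treats a ratio below 0.8 as a warning sign worth investigating.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the fraction of candidates selected in each group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True if the model recommended the candidate.
    """
    totals = defaultdict(int)
    chosen = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            chosen[group] += 1
    return {group: chosen[group] / totals[group] for group in totals}


def disparate_impact_ratio(records):
    """Ratio of the lowest group selection rate to the highest."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favored
    return min(rates.values()) / highest


# Hypothetical, made-up screening results (not real data).
sample = (
    [("men", True)] * 6 + [("men", False)] * 4
    + [("women", True)] * 3 + [("women", False)] * 7
)

rates = selection_rates(sample)
ratio = disparate_impact_ratio(sample)
print(rates)
print(f"ratio = {ratio:.2f}, below four-fifths threshold: {ratio < 0.8}")
```

A low ratio does not prove discrimination on its own, but it flags a model whose outcomes deserve a closer look before it is used on real candidates.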

Lack of explanation, justification, or transparent outcomes

Machine learning models generate results from algorithmically produced, high-dimensional correlations. These results can be opaque to the people who use them or are affected by them, and the lack of a proper explanation can cause trouble.

Inefficient and poor-quality results

AI systems built on poorly managed or poor-quality data will generally produce unreliable and unsafe results. Such an AI could do more harm than good.
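Basic data-quality problems can often be caught before a model is ever trained. As a rough sketch, assuming the training data is a list of Python dictionaries, the hypothetical `audit_records` helper below counts records with missing fields, values outside an expected range, and exact duplicates. The field names, ranges, and sample records are invented for illustration.

```python
def audit_records(records, required_fields, valid_ranges):
    """Count basic data-quality problems in a list of dict records.

    `required_fields` lists the keys every record must have a value for.
    `valid_ranges` maps a field name to an inclusive (low, high) range.
    """
    report = {"missing": 0, "out_of_range": 0, "duplicates": 0}
    seen = set()
    for record in records:
        # A field counts as missing if it is absent or set to None.
        if any(record.get(field) is None for field in required_fields):
            report["missing"] += 1
        # Count every present value that falls outside its allowed range.
        for field, (low, high) in valid_ranges.items():
            value = record.get(field)
            if value is not None and not (low <= value <= high):
                report["out_of_range"] += 1
        # Exact duplicates are detected by comparing sorted key/value pairs.
        key = tuple(sorted(record.items()))
        if key in seen:
            report["duplicates"] += 1
        seen.add(key)
    return report


# Hypothetical, made-up records (not real data).
records = [
    {"age": 34, "income": 52000},
    {"age": None, "income": 48000},   # missing age
    {"age": 212, "income": 61000},    # implausible age
    {"age": 34, "income": 52000},     # duplicate of the first record
]

report = audit_records(records, ["age", "income"], {"age": (0, 120)})
print(report)
```

Checks like these are only a starting point, but a report full of missing, implausible, or duplicated records is an early signal that a model trained on the data should not be trusted.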

AI makes decisions, predictions, and classifications that can be a matter of life and death, so they ought to be transparent. The problem is that they often are not. AI systems can be so sophisticated that they are virtually black boxes, and we don’t want to make key decisions without knowing why.

Reduction in workforce

AI will soon become fairly capable of performing sophisticated cognitive tasks such as driving, programming, and communicating. As a result, it threatens workers who earn their living from monotonous, repetitive tasks that can be easily automated. AI is already used in a variety of fields, including healthcare, transport, data entry, and customer service.

Ethical issues in AI

The advent of AI also threatens people’s employment. Many people feel anxious at the thought that AI will someday take their jobs. For example, if self-driving trucks get the go-ahead for commercialization, as promised by Elon Musk, CEO of Tesla, hundreds of truck drivers could lose their jobs. The same scenario is projected to play out in the corporate sector, where AI and machine learning are already being used to handle administrative tasks.